I. Environment

- This setup adds one master (master02) and two load-balancer nodes (nginx01, nginx02).

| Server | Hostname | IP address | Main components |
|---|---|---|---|
| k8s master01 + etcd01 | master01 | 192.168.3.11 | kube-apiserver kube-controller-manager kube-scheduler etcd |
| k8s master02 | master02 | 192.168.3.21 | kube-apiserver kube-controller-manager kube-scheduler |
| k8s node01 + etcd02 | node01 | 192.168.3.12 | kubelet kube-proxy docker flannel |
| k8s node02 + etcd03 | node02 | 192.168.3.13 | kubelet kube-proxy docker flannel |
| nginx01 | nginx01 | 192.168.3.32 | keepalived, load balancing (primary) |
| nginx02 | nginx02 | 192.168.3.33 | keepalived, load balancing (backup) |

1. master02

[root@localhost ~]# hostnamectl set-hostname master02

[root@localhost ~]# su

[root@master02 ~]# cat >> /etc/hosts << EOF

192.168.3.11 master01

192.168.3.12 node01

192.168.3.13 node02

192.168.3.21 master02

192.168.3.32 nginx01

192.168.3.33 nginx02

EOF

[root@master02 ~]# systemctl disable --now firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master02 ~]# setenforce 0

[root@master02 ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@master02 ~]# swapoff -a

[root@master02 ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab

[root@master02 ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@master02 ~]# sysctl --system

......

[root@master02 ~]# yum install -y ntpdate

[root@master02 ~]# ntpdate time.windows.com

......

2. nginx hosts (nginx01/02)

[root@c7-5 ~]# hostnamectl set-hostname nginx01

[root@c7-5 ~]# su

[root@nginx01 ~]# systemctl disable --now firewalld

[root@nginx01 ~]# setenforce 0

[root@nginx01 ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@nginx01 ~]# cat >> /etc/hosts << EOF

192.168.3.11 master01

192.168.3.12 node01

192.168.3.13 node02

192.168.3.21 master02

192.168.3.32 nginx01

192.168.3.33 nginx02

EOF

II. Deploying master02

1. Copy files

- Copy the certificate files, the configuration files of each master component, and the systemd service unit files from master01 to master02.

master01

[root@master01 ~]# scp -r /opt/etcd/ root@192.168.3.21:/opt/

......

[root@master01 ~]# scp -r /opt/kubernetes/ root@192.168.3.21:/opt/

......

[root@master01 ~]# cd /usr/lib/systemd/system

[root@master01 /usr/lib/systemd/system]# scp kube-apiserver.service kube-controller-manager.service kube-scheduler.service root@192.168.3.21:`pwd`

......

2. Change the IP addresses in the kube-apiserver configuration file

master02

[root@master02 ~]# vim /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.3.11:2379,https://192.168.3.12:2379,https://192.168.3.13:2379 \

--bind-address=192.168.3.21 \ ## change the listen address to master02's IP (annotation only, do not keep it in the file)

--secure-port=6443 \

--advertise-address=192.168.3.21 \ ## change the advertise address to master02's IP (annotation only, do not keep it in the file)

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/apiserver.pem \

--tls-private-key-file=/opt/kubernetes/ssl/apiserver-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

3. Start the services and enable them at boot

3.1 Start

[root@master02 ~]# systemctl enable --now kube-apiserver.service

......

[root@master02 ~]# systemctl enable --now kube-controller-manager.service

......

[root@master02 ~]# systemctl enable --now kube-scheduler.service

......

3.2 Check status

[root@master02 ~]# systemctl status kube-apiserver.service

......

[root@master02 ~]# systemctl status kube-controller-manager.service

......

[root@master02 ~]# systemctl status kube-scheduler.service

......

4. Check the node status

master02

[root@master02 ~]# ln -s /opt/kubernetes/bin/* /usr/local/bin/

[root@master02 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.3.12 Ready <none> 43h v1.12.3

192.168.3.13 Ready <none> 43h v1.12.3

[root@master02 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

192.168.3.12 Ready <none> 43h v1.12.3 192.168.3.12 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://20.10.12

192.168.3.13 Ready <none> 43h v1.12.3 192.168.3.13 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://20.10.12

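-o wide: prints additional information; for Pods, it also shows the name of the Node each Pod runs on. At this point, the node status shown on master02 is only what it read from etcd. The nodes have not actually established a connection with master02 yet, so a VIP is needed to tie the nodes to master02.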

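III. Load balancer deployment (nginx hosts; same steps on nginx01 and nginx02)

Set up an active/standby load balancer pair: nginx does the load balancing, keepalived provides hot-standby failover.

1. Install nginx

- Add the official nginx online yum repository as a local repo file

- Same steps on nginx01 and nginx02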

[root@nginx01 ~]# cat > /etc/yum.repos.d/nginx.repo << 'EOF'

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

EOF

[root@nginx01 ~]# yum install -y nginx

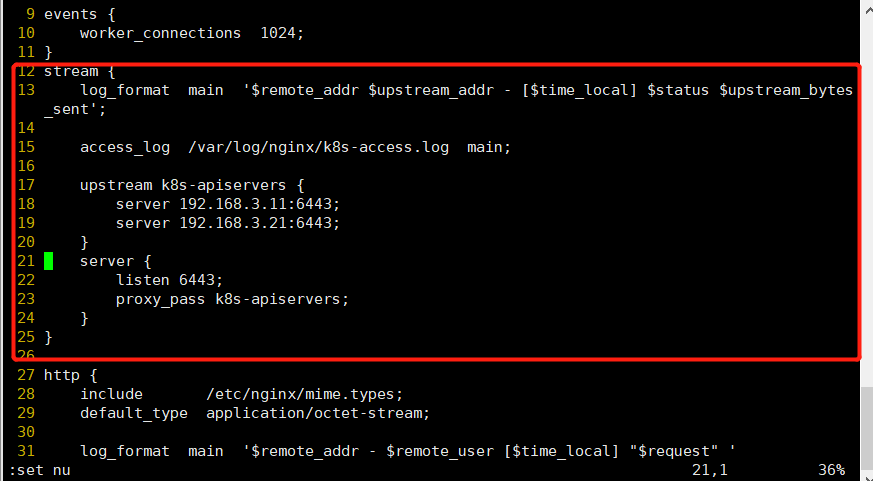

2. Modify the nginx configuration file

Configure layer-4 reverse-proxy load balancing, pointing at the IP addresses and port 6443 of the two k8s masters.

Same steps on nginx01 and nginx02.

[root@nginx01 ~]# vim /etc/nginx/nginx.conf

......

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiservers {

server 192.168.3.11:6443;

server 192.168.3.21:6443;

}

server {

listen 6443;

proxy_pass k8s-apiservers;

}

}

http {

......
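A side note on the upstream block above: the server lines of a stream upstream accept the max_fails and fail_timeout parameters, which let nginx temporarily skip a master that stops answering. The sketch below is only an illustration. The helper name render_upstream and the values 3 and 30s are assumptions, not part of the original setup. It prints an upstream block that could stand in for the one in nginx.conf.

```shell
#!/bin/bash
# Print an nginx stream "upstream" block for the given API server addresses.
# max_fails / fail_timeout are parameters of the upstream "server" directive;
# the values used here (3 and 30s) are illustrative assumptions.
render_upstream() {
    local name="$1"; shift
    local addr
    printf 'upstream %s {\n' "$name"
    for addr in "$@"; do
        printf '    server %s:6443 max_fails=3 fail_timeout=30s;\n' "$addr"
    done
    printf '}\n'
}

# The two masters from the environment table.
render_upstream k8s-apiservers 192.168.3.11 192.168.3.21
```

After pasting the generated block into the stream section, nginx -t should still be run before reloading.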

3. Start nginx

3.1 Check the configuration file syntax

[root@nginx01 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

3.2 Start nginx

[root@nginx01 ~]# systemctl enable --now nginx

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

3.3 Check status

[root@nginx01 ~]# systemctl status nginx

● nginx.service - nginx - high performance web server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2021-12-17 14:29:12 CST; 18s ago

Docs: http://nginx.org/en/docs/

Process: 44868 ExecStart=/usr/sbin/nginx -c /etc/nginx/nginx.conf (code=exited, status=0/SUCCESS)

Main PID: 44869 (nginx)

CGroup: /system.slice/nginx.service

├─44869 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf

├─44870 nginx: worker process

├─44871 nginx: worker process

├─44872 nginx: worker process

└─44873 nginx: worker process

Dec 17 14:29:12 nginx01 systemd[1]: Starting nginx - high performance web server...

Dec 17 14:29:12 nginx01 systemd[1]: Started nginx - high performance web server.

[root@nginx01 ~]# netstat -natp | grep nginx

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 44869/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 44869/nginx: master

IV. Deploying the keepalived service

1. Install keepalived

- Install on both nginx01 and nginx02

[root@nginx01 ~]# yum install -y keepalived

2. Modify the keepalived configuration file

nginx01 configuration

[root@nginx01 ~]# vim /etc/keepalived/keepalived.conf

## line 10: modify

smtp_server 127.0.0.1

## line 12: modify; NGINX_MASTER on nginx01, NGINX_BACKUP on nginx02

router_id NGINX_MASTER

## lines 13-16: delete

}

## line 14: insert a script that is run periodically

vrrp_script check_nginx {

## line 15: path of the script that checks whether nginx is alive

script "/etc/nginx/check_nginx.sh"

}

## line 18: modify; MASTER on nginx01, BACKUP on nginx02

state MASTER

## line 19: modify; set the NIC name to ens33

interface ens33

## line 20: set the vrid; it must be the same on both nodes

virtual_router_id 51

## line 21: 100 on nginx01, 90 on nginx02

priority 100

## line 27: modify; set the VIP

virtual_ipaddress {

192.168.3.31/24

}

## line 30: add; reference the script defined in vrrp_script

track_script {

check_nginx

}

}

## delete the remaining unused configuration

--------------------------------------------------------------------

# Complete keepalived configuration on nginx01

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.3.31

}

track_script {

check_nginx

}

}

nginx02 configuration

[root@nginx02 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.3.31/24

}

track_script {

check_nginx

}

}

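3. Create the nginx health-check script

[root@nginx01 ~]# vim /etc/nginx/check_nginx.sh

#!/bin/bash

# egrep -cv "grep|$$" counts the lines that contain neither "grep" nor $$ (the PID of the current shell), so the grep command and this script itself are not counted

count=$(ps -ef | grep nginx | egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

Save and quit (:wq).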

[root@nginx01 ~]# chmod +x /etc/nginx/check_nginx.sh

[root@nginx01 ~]# scp /etc/nginx/check_nginx.sh root@192.168.3.33:/etc/nginx/check_nginx.sh
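To see why the filter in check_nginx.sh is needed, it helps to run the same pipeline on canned input. The sketch below is an illustration under assumptions: the function name count_nginx and the sample process list are made up, and the PID is passed in as an argument instead of using $$. Because the script path itself contains "nginx", the script's own process line would otherwise be counted as a running nginx.

```shell
#!/bin/bash
# Count nginx-related lines in `ps -ef`-style text read from stdin,
# skipping the grep command and any line containing the PID given as $1.
# (Hypothetical helper; check_nginx.sh itself uses $$ for the PID.)
count_nginx() {
    local self_pid="$1"
    grep nginx | grep -Ecv "grep|${self_pid}"
}

# Made-up sample: two real nginx processes, the check script, and the grep.
sample='root 44869 1 0 14:29 ? 00:00:00 nginx: master process /usr/sbin/nginx
nginx 44870 44869 0 14:29 ? 00:00:00 nginx: worker process
root 50001 49000 0 14:30 ? 00:00:00 /bin/bash /etc/nginx/check_nginx.sh
root 50002 50001 0 14:30 ? 00:00:00 grep nginx'

printf '%s\n' "$sample" | count_nginx 50001
```

One caveat of this style of filter: the PID is matched as a plain substring, so an unrelated line that happens to contain the same digits is dropped as well.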

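4. Start the keepalived service

nginx must be running before keepalived is started; otherwise the check script finds no nginx process and stops keepalived right away.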

[root@nginx01 ~]# systemctl enable --now keepalived.service

......

[root@nginx01 ~]# systemctl status keepalived.service

......

Active: active (running) since Fri 2021-12-17 16:38:46 CST; 4s ago

......

5. Check the VIP on nginx01

[root@nginx01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:f5:cf:df brd ff:ff:ff:ff:ff:ff

inet 192.168.3.32/24 brd 192.168.3.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.3.31/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::1185:9499:4df9:4e60/64 scope link

valid_lft forever preferred_lft forever
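In the output above the VIP appears as 192.168.3.31/32 on ens33. To check this from a script, for instance while testing failover (stop nginx on nginx01, then look at nginx02, where the VIP should appear once the check script has stopped keepalived on nginx01), a small helper along these lines can be used. The function name has_vip is an assumption. It expects the one-line-per-address format printed by ip -o -4 addr show.

```shell
#!/bin/bash
# Succeed if the given IPv4 address is present in `ip -o -4 addr show`
# output supplied on stdin (field 4 holds "address/prefix").
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        { split($4, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }
    '
}

# Typical use on a load-balancer host (interface name taken from the article):
#   ip -o -4 addr show dev ens33 | has_vip 192.168.3.31 && echo "VIP is here"
```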

6. Point the nodes at the VIP (on the nodes; node01 as the example)

6.1 Modify the node configuration files

Change server in bootstrap.kubeconfig, kubelet.kubeconfig, and kube-proxy.kubeconfig on each node to the VIP.

Same steps on both nodes.

[root@node01 ~]# cd /opt/kubernetes/cfg/

[root@node01 cfg]# vim bootstrap.kubeconfig

## line 5: change the IP to the VIP

server: https://192.168.3.31:6443

Save and quit (:wq).

[root@node01 cfg]# vim kubelet.kubeconfig

## line 5: change the IP to the VIP

server: https://192.168.3.31:6443

Save and quit (:wq).

[root@node01 cfg]# vim kube-proxy.kubeconfig

## line 5: change the IP to the VIP

server: https://192.168.3.31:6443
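The three edits above are the same one-line change, so they can also be done with sed instead of vim. The following is a minimal sketch, assuming GNU sed (as on CentOS 7) and that each file has a line of the form server: https://<ip>:<port>. The function name set_apiserver_vip is hypothetical.

```shell
#!/bin/bash
# Rewrite the host part of the "server: https://<ip>:<port>" line in each
# given kubeconfig file so that it points at the VIP. The port is preserved.
set_apiserver_vip() {
    local vip="$1"; shift
    local f
    for f in "$@"; do
        sed -i -E "s#(server: https://)[^:/]+(:[0-9]+)#\1${vip}\2#" "$f"
    done
}

# Typical use on a node (paths taken from the article):
#   cd /opt/kubernetes/cfg
#   set_apiserver_vip 192.168.3.31 bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig
```

Running grep server: on the three files afterwards is a quick way to confirm that all of them now show the VIP.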

6.2 Restart the kubelet and kube-proxy services

Same steps on node01 and node02.

[root@node02 cfg]# systemctl restart kubelet.service

[root@node02 cfg]# systemctl restart kube-proxy.service

7. On nginx01, check nginx's connections to the nodes and masters

[root@nginx01 ~]# netstat -natp | grep nginx

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 44869/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 44869/nginx: master

tcp 0 0 192.168.3.32:45990 192.168.3.21:6443 ESTABLISHED 44871/nginx: worker

tcp 0 0 192.168.3.31:6443 192.168.3.13:38576 ESTABLISHED 44871/nginx: worker

tcp 0 0 192.168.3.32:33922 192.168.3.11:6443 ESTABLISHED 44871/nginx: worker

tcp 0 0 192.168.3.32:33916 192.168.3.11:6443 ESTABLISHED 44870/nginx: worker

tcp 0 0 192.168.3.31:6443 192.168.3.12:49812 ESTABLISHED 44871/nginx: worker

tcp 0 0 192.168.3.32:45998 192.168.3.21:6443 ESTABLISHED 44871/nginx: worker

tcp 0 0 192.168.3.31:6443 192.168.3.13:38562 ESTABLISHED 44871/nginx: worker

tcp 0 0 192.168.3.31:6443 192.168.3.12:49804 ESTABLISHED 44870/nginx: worker

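nginx listens locally on ports 6443 and 80: 6443 is the load-balancing proxy, and 80 serves the web page.

Port 6443 on the VIP (192.168.3.31) holds the connections coming in from node01 (192.168.3.12) and node02 (192.168.3.13).

From its own address (192.168.3.32), nginx01 holds connections to port 6443 on master01 (192.168.3.11) and master02 (192.168.3.21).

- The multi-master load-balanced setup is now complete.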

V. Verify by creating a pod from master02

1. List the pods and nodes

[root@master02 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.3.12 Ready <none> 46h v1.12.3

192.168.3.13 Ready <none> 45h v1.12.3

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

2. Create a pod

[root@master02 ~]# kubectl create deploy nginx-master02-test --image=nginx

deployment.apps/nginx-master02-test created

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-master02-test-79fc886b76-mxt4d 0/1 ContainerCreating 0 5s

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

# Wait for the container to be created; "kubectl describe pod nginx-master02-test" shows the progress

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-master02-test-79fc886b76-mxt4d 1/1 Running 0 79s

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

3. Check which node the pod runs on

[root@master02 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-master02-test-79fc886b76-mxt4d 1/1 Running 0 107s 172.17.65.3 192.168.3.13 <none>

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h 172.17.8.3 192.168.3.12 <none>

The pod was created successfully from master02, with load balancing handled by nginx.

VI. Deleting pods

1. View all k8s resources

[root@master02 ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-master02-test-79fc886b76-mxt4d 1/1 Running 0 2m40s

pod/nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 47h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-master02-test 1 1 1 1 2m40s

deployment.apps/nginx-test 1 1 1 1 45h

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-master02-test-79fc886b76 1 1 1 2m40s

replicaset.apps/nginx-test-7dc4f9dcc9 1 1 1 45h

2. Delete a pod with delete

[root@master02 ~]# kubectl delete pod nginx-master02-test-79fc886b76-mxt4d

pod "nginx-master02-test-79fc886b76-mxt4d" deleted

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-master02-test-79fc886b76-5xkpl 0/1 ContainerCreating 0 20s

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-master02-test-79fc886b76-5xkpl 1/1 Running 0 41s

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

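- Because the replicaset created by the deployment maintains the desired number of pods, a replacement pod is created as soon as this pod is deleted. This is the pod self-healing behavior.

- To remove the pod for good, delete the deployment that owns it; kubectl get deploy lists the deployments.

3. Delete a deployment with delete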

[root@master02 ~]# kubectl get deploy

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx-master02-test 1 1 1 1 6m39s

nginx-test 1 1 1 1 45h

[root@master02 ~]# kubectl delete deploy nginx-master02-test

deployment.extensions "nginx-master02-test" deleted

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

[root@master02 ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 47h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-test 1 1 1 1 45h

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-test-7dc4f9dcc9 1 1 1 45h

Once the deployment is deleted, its replicaset and pod are deleted along with it.

4. Delete a replicaset with delete

[root@master02 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-test-7dc4f9dcc9 1 1 1 45h

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-test-7dc4f9dcc9-b5bqv 1/1 Running 0 45h

[root@master02 ~]# kubectl delete rs nginx-test-7dc4f9dcc9

replicaset.extensions "nginx-test-7dc4f9dcc9" deleted

[root@master02 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-test-7dc4f9dcc9-9k652 0/1 ContainerCreating 0 22s

[root@master02 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-test-7dc4f9dcc9 1 1 1 38s

[root@master02 ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-test-7dc4f9dcc9-9k652 1/1 Running 0 50s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 47h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-test 1 1 1 1 46h

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-test-7dc4f9dcc9 1 1 1 50s

After the replicaset is deleted, the deployment recreates it (and the new replicaset creates a new pod), so the pod self-healing mechanism is not affected.

[root@master02 ~]# kubectl describe deploy nginx-test

Name: nginx-test

Namespace: default

CreationTimestamp: Wed, 15 Dec 2021 19:17:00 +0800

Labels: app=nginx-test

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=nginx-test

Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx-test

Containers:

nginx:

Image: nginx:1.14

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx-test-7dc4f9dcc9 (1/1 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 86s (x2 over 46h) deployment-controller Scaled up replica set nginx-test-7dc4f9dcc9 to 1

VII. Permission management

1. View the pod logs

# This pod was created on node02

[root@master02 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-test-7dc4f9dcc9-9k652 1/1 Running 0 2m33s 172.17.65.3 192.168.3.13 <none>

# The request is treated as the anonymous user at this point, which has no permission to read the logs

[root@master02 ~]# kubectl logs nginx-test-7dc4f9dcc9-9k652

Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-test-7dc4f9dcc9-9k652)

2. Bind the cluster-admin role to the anonymous user

[root@master02 ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created
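Note that this binding gives every unauthenticated client full control of the cluster, so it is only reasonable in a lab. The Forbidden message above names resource=nodes, subresource=proxy, which usually means kube-apiserver did not present a client certificate when it called the kubelet. kube-apiserver provides the --kubelet-client-certificate and --kubelet-client-key flags for that purpose. The helper below is a hypothetical sketch (the function name is an assumption) that only checks whether an options file such as /opt/kubernetes/cfg/kube-apiserver already sets both flags. Which certificate files to use depends on how the cluster CA was set up and is outside what this article covers.

```shell
#!/bin/bash
# Succeed if the given kube-apiserver options file sets both
# --kubelet-client-certificate and --kubelet-client-key.
has_kubelet_client_cert() {
    local cfg="$1"
    grep -q -- '--kubelet-client-certificate=' "$cfg" &&
        grep -q -- '--kubelet-client-key=' "$cfg"
}

# Typical use on a master (path taken from the article):
#   has_kubelet_client_cert /opt/kubernetes/cfg/kube-apiserver || echo "flags not set"
```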

3. Access the pod (to generate log entries)

Check which node the pod runs on

[root@master02 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-test-7dc4f9dcc9-9k652 1/1 Running 0 5m12s 172.17.65.3 192.168.3.13 <none>

Access 172.17.65.3 from node02

[root@node02 cfg]# curl 172.17.65.3

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

4. View the pod logs again

[root@master02 ~]# kubectl logs nginx-test-7dc4f9dcc9-9k652

172.17.65.1 - - [17/Dec/2021:09:22:34 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
